The CFO’s dilemma: balancing AI acceleration with audit integrity


CtrlPrint : January 30, 2026


The initial hype surrounding artificial intelligence in corporate finance has settled. We have moved past the "wow" phase of generative text and are now facing the stark reality of operational implementation. For finance leaders, the mandate is no longer just to "explore" AI; it is to deploy it safely within one of the most highly regulated environments in the corporate world: the annual report.

However, a dangerous gap has emerged. While teams are eager to use Large Language Models (LLMs) to draft narratives and analyse data, the traditional tools used for reporting (static spreadsheets, disconnected documents, and email chains) are ill-equipped to govern these new capabilities.

The challenge is not accessing intelligence; it is orchestrating it. The successful integration of AI into corporate reporting requires a shift in mindset: from viewing AI as a universal tool to viewing it as a specific accelerator that must be contained within a secure, governed platform.

To understand where AI fits, and where it doesn't, we must first analyse the friction points in the modern "last mile" of reporting. Today’s reporting cycle is often bottlenecked by three distinct pressures, each of which requires a different technical solution.

Attempting to solve all three with a "generative AI" blanket is risky. Instead, leaders need a layered architecture that applies the right technology to the right problem.

The most significant threat to audit integrity today is not AI hallucination – it is the broken chain of custody.

In a typical scenario, a junior analyst might copy financial data into a public, browser-based AI tool to generate a liquidity summary. They then paste that summary back into a Word document or an InDesign file. In that split second of copy-pasting, the data lineage is severed. The prompt history is lost. The source data is potentially exposed to the public cloud.

This is "shadow reporting". It creates a final document that looks professional but lacks the evidentiary backing required by auditors. If a regulator asks why a specific risk factor was downplayed, and the answer is "the algorithm suggested it", the audit committee is left exposed.
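To make the idea of an unbroken chain of custody concrete, here is a minimal sketch of what a lineage record for one piece of AI-assisted text could look like. It is an illustration under stated assumptions, not a description of any vendor's audit log: the `LineageRecord` schema, its field names, and the helper functions are all hypothetical.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of a UTF-8 string as a hex string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class LineageRecord:
    """One audit-log entry for AI-assisted text (hypothetical schema)."""
    user: str
    model: str
    prompt: str
    source_hash: str  # fingerprint of the exact input data
    output_hash: str  # fingerprint of the generated text
    created_at: str   # UTC timestamp in ISO 8601 format


def record_generation(user: str, model: str, prompt: str,
                      source_data: str, output: str) -> LineageRecord:
    """Capture who asked what, of which data, and what came back."""
    return LineageRecord(
        user=user,
        model=model,
        prompt=prompt,
        source_hash=sha256_hex(source_data),
        output_hash=sha256_hex(output),
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def matches(record: LineageRecord, source_data: str, output: str) -> bool:
    """Check that report text still traces back to the logged inputs."""
    return (record.source_hash == sha256_hex(source_data)
            and record.output_hash == sha256_hex(output))
```

With a record like this, the answer to a regulator's question is no longer "the algorithm suggested it": the prompt, the user, and a fingerprint of the exact source data are all on file. Copy-pasting through a public browser tool produces none of this.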

As AI becomes embedded in reporting workflows, the discussion often focuses on what the technology can do — summarise documents, answer questions, draft narrative disclosures. Far less attention is paid to where the AI operates, even though this is where the most significant security risks arise.

Many software vendors now promote “chat with your PDF” functionality. While this may be adequate for generic documents, it is fundamentally insufficient for complex corporate reports such as annual reports, sustainability reports, or regulatory filings. These documents contain highly sensitive information, including Material Non-Public Information (MNPI), forward-looking statements, and draft disclosures that are subject to strict governance, audit, and approval processes.

The primary risk is not the AI model itself, but the environment in which it is deployed. Free or browser-based AI tools often operate outside controlled enterprise systems. Data may be transmitted to external servers, logged for quality monitoring, or retained to improve underlying models. Even when vendors claim data is “not stored,” the lack of contractual guarantees, audit trails, and access controls creates unacceptable exposure for corporate reporting teams.

In contrast, when AI is embedded within a governed reporting platform, security and compliance controls remain intact. The AI operates inside the same secure perimeter as the reporting data, inheriting existing permissions, role-based access, version control, and audit logging. Critically, data is not used to train public models or shared beyond the organisation’s defined security boundary.

This distinction is particularly important in the reporting lifecycle. Draft disclosures evolve over time, and early versions often contain assumptions, estimates, or incomplete information. If these drafts are exposed through unsecured AI tools, organisations risk information leakage, regulatory breaches, or reputational damage — even if the final published report is compliant.

Ultimately, the safe use of AI in corporate reporting is less about adding intelligence and more about preserving control. AI can enhance efficiency and insight, but only when it is deployed within an environment designed for regulated, high-stakes information. In corporate reporting, where AI lives determines whether it is a productivity enabler — or a material security risk.

Security is paramount. When using AI within a governed platform like CtrlPrint, your data is not being used to train a public model – a massive risk associated with free, browser-based tools. This ensures that your Material Non-Public Information (MNPI) remains within a secure boundary.

To solve this, organisations must stop treating AI as a "black box" that does everything. Instead, they should integrate specific technologies – AI where appropriate, and rigid automation where necessary – into a secure platform environment.

A robust reporting ecosystem relies on three distinct pillars: a verified data foundation, AI-assisted review of changes, and precise, expert-led tagging.

Before any advanced tools can be applied, the underlying data must be unimpeachable. If your numbers are static text pasted from Excel, neither AI nor automation can verify their accuracy.

This is where CtrlPrint Integrate creates the necessary foundation. It establishes a direct link between the source data (ERP systems or master spreadsheets) and the reporting document (InDesign or Word). The link is deterministic: when a figure changes in the source, it updates in the report, preserving the single source of truth.
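The principle behind a deterministic source-to-document link can be sketched in a few lines. This is a simplified illustration, not how CtrlPrint Integrate is implemented: the `{{key}}` placeholder syntax, the `render` function, and the `revenue_2025` key are all assumptions made for the example.

```python
import re

# Hypothetical placeholder syntax: {{key}} marks a figure linked to the source.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def render(template: str, source: dict[str, str]) -> str:
    """Replace every {{key}} with its source value; fail loudly on unknown keys."""
    def lookup(match: re.Match) -> str:
        key = match.group(1)
        if key not in source:
            # A missing link is an error, never a silent guess.
            raise KeyError(f"No source value for linked figure '{key}'")
        return source[key]

    return PLACEHOLDER.sub(lookup, template)


template = "Revenue rose to {{revenue_2025}} million."
source = {"revenue_2025": "412.5"}
print(render(template, source))  # Revenue rose to 412.5 million.

# When the source figure changes, re-rendering updates the report text.
source["revenue_2025"] = "415.0"
print(render(template, source))  # Revenue rose to 415.0 million.
```

The design choice that matters is the absence of any probabilistic step: the same source always produces the same document, and a broken link raises an error instead of leaving a stale number in place.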

Once the data foundation is secure, we can deploy Generative AI where it saves the most time: reviewing large volumes of text.

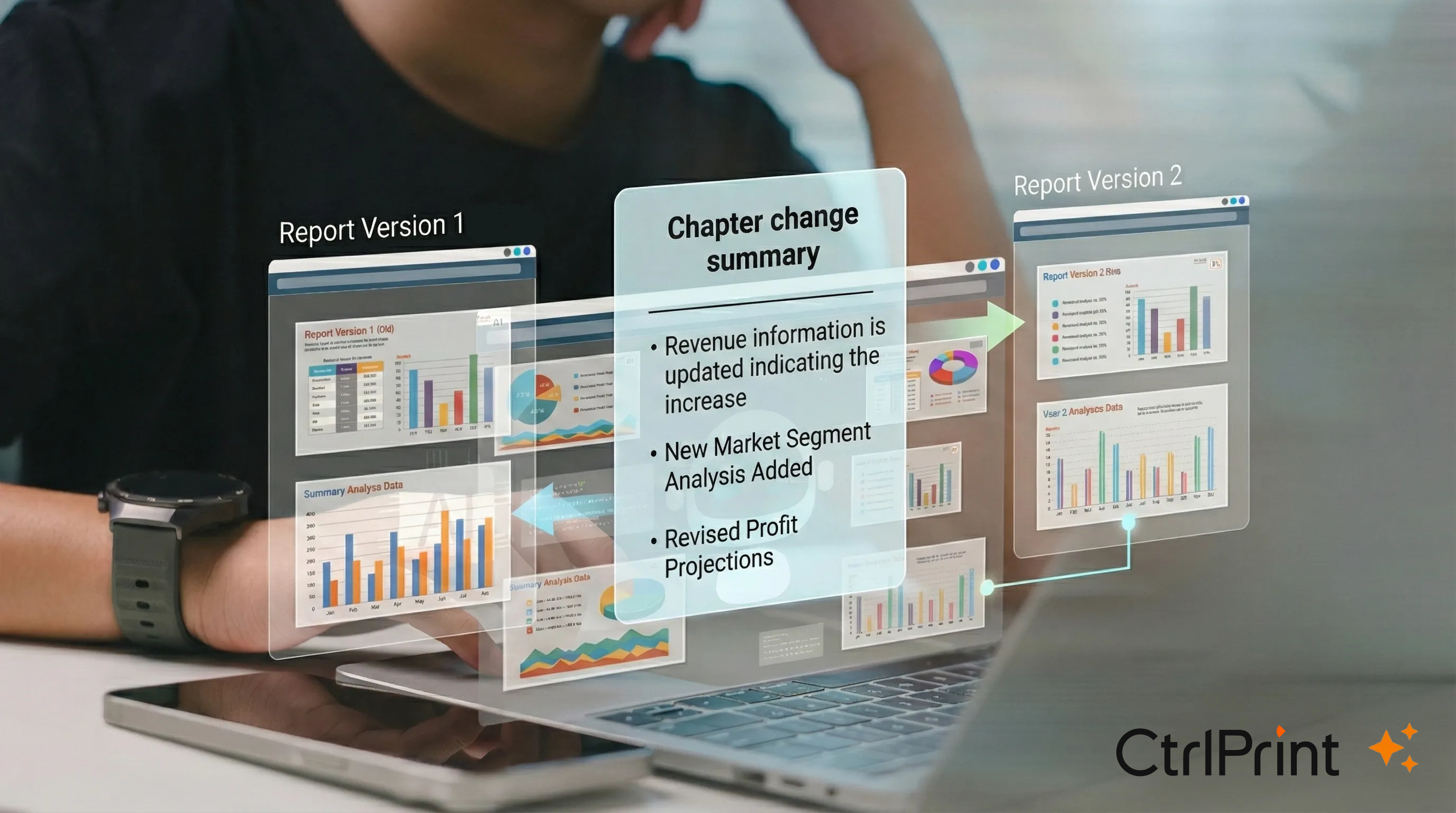

Reviewing a 200-page annual report for minor edits is a poor use of a CFO’s time. This is the domain of the Change Summary feature. Operating within the secure boundary of the platform, this AI capability scans different versions of the report and generates a natural language summary of the material changes.

Unlike a simple "diff" tool that flags every comma, Change Summary acts like a human assistant, highlighting meaningful updates. It allows reviewers to validate the narrative evolution without getting lost in the noise.
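The gap between a raw diff and a review of material changes can be illustrated without any AI at all. The sketch below is not how Change Summary works; it is a rule-based stand-in, using Python's standard `difflib`, that ignores purely cosmetic edits (case, punctuation, spacing) and reports only sentences whose wording or figures changed. The `normalise` and `material_changes` helpers are hypothetical names for this example.

```python
import difflib
import re


def normalise(sentence: str) -> str:
    """Lower-case, drop punctuation and collapse spaces so cosmetic edits compare equal."""
    stripped = re.sub(r"[,;:\"'()]", "", sentence.lower())
    stripped = stripped.rstrip(". ")
    return " ".join(stripped.split())


def material_changes(old: list[str], new: list[str]) -> list[tuple[str, str]]:
    """Return (old text, new text) pairs for sentences that changed in substance."""
    matcher = difflib.SequenceMatcher(
        a=[normalise(s) for s in old],
        b=[normalise(s) for s in new],
        autojunk=False,
    )
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((" ".join(old[i1:i2]), " ".join(new[j1:j2])))
    return changes


old = ["Liquidity remained strong.", "Net debt was 120 million."]
new = ["Liquidity remained strong", "Net debt was 150 million."]
print(material_changes(old, new))
# [('Net debt was 120 million.', 'Net debt was 150 million.')]
```

A plain diff would flag both sentences, because the first lost its full stop. The filtered view surfaces only the change in net debt, which is the one a reviewer needs to see.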


Finally, there is the "last mile" of reporting: tagging. While there is a temptation to label everything as AI, the regulatory world requires precision, not probability. You do not want an AI "guessing" your taxonomy.

CtrlPrint XBRL Tagger streamlines the reporting process by centring on the professional judgment of your accounting and consolidation experts, who select the XBRL tags that best represent the company's financial data. As these selections are made, the tool automatically preserves the tags and their defined attributes, creating a complete version history that remains available for future reporting cycles. This persistence means previously used tags are ready for the next period, which maintains consistency and saves time. The tool also includes integrated artificial intelligence that suggests tags to assist users, which is especially effective for accelerating the initial tagging phase.
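The value of tag persistence is easy to see in a small data-structure sketch. This is an illustration only, not the data model of CtrlPrint XBRL Tagger: the `TagStore` class and its methods are hypothetical, and the tag shown is used purely as an example value.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TagStore:
    """Keeps every expert tag selection per line item (hypothetical model)."""
    # line item -> list of (reporting period, selected tag), oldest first
    history: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def select(self, line_item: str, period: str, tag: str) -> None:
        """Record the tag an expert chose for a line item in a given period."""
        self.history.setdefault(line_item, []).append((period, tag))

    def carry_forward(self, line_item: str) -> Optional[str]:
        """Return the most recently selected tag, or None if never tagged."""
        entries = self.history.get(line_item)
        return entries[-1][1] if entries else None


store = TagStore()
store.select("Revenue", "FY2024", "ifrs-full:Revenue")

# Next period, the earlier selection is available without re-tagging.
print(store.carry_forward("Revenue"))   # ifrs-full:Revenue
print(store.carry_forward("Goodwill"))  # None
```

The selection itself stays a human decision. The store only remembers it, which is why the same line item is tagged the same way from one period to the next unless an expert deliberately changes it.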

The "black box" era of financial reporting is incompatible with modern governance standards. As reporting requirements grow to include more non-financial data (CSRD, ESRS), the volume and complexity of disclosures will exceed what manual, human-only processes can handle.

However, the winners in this new landscape will not be the companies that blindly apply AI to every process. They will be the companies that understand the difference between generative creation and structural compliance. By integrating these distinct technologies into a seamless, secure environment, organisations can ensure that innovation never comes at the cost of control.

The future of the annual report is not just digital; it is dynamic. But it must always be defensible.
